Artificial Intelligence (AI) is transforming industries, but its potential is undermined when systems perpetuate bias. Fairness measures are now foundational to ethical AI development: they help ensure equitable outcomes, foster trust, and support compliance with global regulations. This article explores how fairness measures address bias, strengthen accountability, and align AI with societal values, drawing on current tools, case studies, and emerging trends.

The Ethical and Practical Imperative of Fairness

AI systems trained on historical data risk amplifying societal inequities. For example, facial analysis systems have shown error rates up to 34 percentage points higher for darker-skinned women than for lighter-skinned men, and hiring algorithms have systematically downgraded resumes with female-associated keywords. Such biases erode trust and expose organizations to legal risk.

Regulatory frameworks such as the EU AI Act and Canada’s proposed AIDA impose, or would impose, bias-related obligations on high-risk AI applications. At the same time, consumer expectations are rising: a 2023 PwC Global AI Survey found that 85% of users demand transparency in AI decision-making. Fairness measures are no longer optional; they are critical to ethical innovation and market viability.

Understanding Bias: Types and Sources

Bias in AI arises from flawed data, design choices, or real-world interactions. Key types include:

- Data Bias: Skewed training data underrepresents marginalized groups.
  - Example: Healthcare algorithms trained on predominantly white patient data can misdiagnose conditions in Black patients.

- Algorithmic Bias: Modeling choices, such as objectives, features, and proxies, favor dominant groups.
  - Example: Credit scoring models that penalize ZIP codes correlated with low-income neighborhoods.

- Interaction Bias: Feedback loops reinforce stereotypes.
  - Example: Recommendation engines that promote gender-stereotyped roles.

Root Causes:

- Historical Bias: Societal inequities embedded in data (e.g., biased hiring records).

- Systemic Bias: Institutional practices that shape model design (e.g., policing algorithms that target minority neighborhoods).

- Technical Limitations: Poorly chosen features or optimization objectives.

Core Fairness Metrics: From Theory to Practice

Fairness is quantified through metrics tailored to context:

| Metric | Definition | Use Case |
|---|---|---|
| Demographic Parity | Equal positive-outcome (selection) rates across groups. | Loan approvals, hiring. |
| Equal Opportunity | Equal true positive rates across groups. | Disease diagnosis, college admissions. |
| Counterfactual Fairness | An individual’s outcome is unchanged in a counterfactual world where their protected attribute (e.g., race) is different. | Criminal risk assessments. |
| Disparate Impact Ratio | Selection rate of the unprivileged group divided by that of the privileged group; values below 0.8 (the "four-fifths rule") commonly flag adverse impact. | Compliance audits. |
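The three group-based metrics in the table can be computed directly from predictions. The sketch below assumes binary labels and predictions (1 = favorable outcome) and one unprivileged and one privileged group; the function names and the toy data are hypothetical and not taken from any particular library.

```python
import math
from typing import Dict, Hashable, List, Sequence


def _rate(values: List[int]) -> float:
    """Fraction of 1s in a list of 0/1 values (NaN if the list is empty)."""
    return sum(values) / len(values) if values else math.nan


def selection_rate(y_pred: Sequence[int], groups: Sequence[Hashable], g: Hashable) -> float:
    """P(prediction = 1 | group = g)."""
    return _rate([p for p, a in zip(y_pred, groups) if a == g])


def true_positive_rate(y_true, y_pred, groups, g) -> float:
    """P(prediction = 1 | label = 1, group = g)."""
    return _rate([p for t, p, a in zip(y_true, y_pred, groups) if a == g and t == 1])


def fairness_report(y_true, y_pred, groups, unprivileged, privileged) -> Dict[str, float]:
    """Compare an unprivileged group against a privileged group."""
    sr_u = selection_rate(y_pred, groups, unprivileged)
    sr_p = selection_rate(y_pred, groups, privileged)
    tpr_u = true_positive_rate(y_true, y_pred, groups, unprivileged)
    tpr_p = true_positive_rate(y_true, y_pred, groups, privileged)
    di = sr_u / sr_p if sr_p > 0 else math.nan  # undefined if the privileged rate is 0
    return {
        # Demographic parity: 0.0 means equal selection rates.
        "demographic_parity_difference": sr_u - sr_p,
        # Equal opportunity: 0.0 means equal true positive rates.
        "equal_opportunity_difference": tpr_u - tpr_p,
        # Disparate impact ratio: 1.0 means parity; below 0.8 fails the four-fifths rule.
        "disparate_impact_ratio": di,
    }


# Hypothetical toy data: five applicants per group.
y_true = [1, 0, 1, 1, 0, 1, 0, 1, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 1, 1, 0]
groups = ["A"] * 5 + ["B"] * 5
report = fairness_report(y_true, y_pred, groups, unprivileged="A", privileged="B")
# DP difference -0.4, EO difference about -0.33, DI ratio 0.5 (below 0.8)
print(report)
```

Reporting differences for parity and opportunity but a ratio for disparate impact mirrors how these quantities are usually stated; which one matters depends on the use case listed in the table.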

Emerging Metrics (2023):

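- Causal Fairness: Uses causal graphs to distinguish legitimate from discriminatory pathways between protected attributes and outcomes, targeting the root causes of bias.

- Algorithmic Recourse: Checks whether people who receive unfavorable decisions have feasible, actionable changes that would reverse them, giving users a concrete way to challenge or overturn a decision.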
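Counterfactual and causal fairness both rest on the same recipe: infer the background factors behind an observation (abduction), change the protected attribute (action), and recompute the outcome (prediction). The sketch below assumes a hypothetical additive causal model, X = U + beta * A, in which a feature X is partly caused by a binary protected attribute A; `beta`, the threshold, and the toy rows are illustrative assumptions, and a real audit would need a defensible causal graph.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

Row = Tuple[float, int]  # (observed feature x, protected attribute a in {0, 1})


@dataclass
class AdditiveSCM:
    """Hypothetical structural causal model: X = U + beta * A."""
    beta: float

    def abduct(self, x: float, a: int) -> float:
        # Abduction: recover the background variable U from the observation.
        return x - self.beta * a

    def counterfactual_x(self, x: float, a: int, a_new: int) -> float:
        # Action and prediction: set A := a_new, keep U fixed, recompute X.
        return self.abduct(x, a) + self.beta * a_new


def counterfactual_consistency(
    scm: AdditiveSCM, rows: List[Row], predictor: Callable[[float, int], int]
) -> float:
    """Share of individuals whose prediction is unchanged when A is flipped counterfactually."""
    unchanged = 0
    for x, a in rows:
        a_cf = 1 - a
        x_cf = scm.counterfactual_x(x, a, a_cf)
        if predictor(x, a) == predictor(x_cf, a_cf):
            unchanged += 1
    return unchanged / len(rows)


scm = AdditiveSCM(beta=0.8)  # hypothetical strength of A's effect on X
THRESHOLD = 1.0  # hypothetical decision cutoff


def uses_x(x: float, a: int) -> int:
    # Thresholds the observed feature, which is partly caused by A.
    return int(x > THRESHOLD)


def uses_u(x: float, a: int) -> int:
    # Thresholds only the background variable U, which A does not cause.
    return int(scm.abduct(x, a) > THRESHOLD)


rows = [(1.5, 1), (0.5, 0), (2.5, 1), (0.1, 0)]
print(counterfactual_consistency(scm, rows, uses_x))  # 0.5
print(counterfactual_consistency(scm, rows, uses_u))  # 1.0
```

In this toy model, the predictor built only on U is counterfactually fair by construction, while the one that uses X changes its decision for half of the individuals when their protected attribute is flipped.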

Implementing Fairness: Tools and Strategies

Leading tools integrate fairness into AI pipelines:

- IBM AI Fairness 360: Open-source library with 70+ metrics (e.g., statistical parity, equalized odds).

- TensorFlow Fairness Indicators: Visualizes disparities across subgroups in large datasets.

- Hugging Face Evaluate: Open-source library with bias measurements for language models (e.g., toxicity, regard, HONEST).

- AWS SageMaker Clarify: Measures bias in training data and trained models, and can monitor deployed models for bias drift.

Implementation Strategies:

- Pre-processing: Augment data to balance representation (e.g., Synthea for synthetic health data).

- In-processing: Apply fairness constraints or penalties during training (e.g., adversarial debiasing, Google’s MinDiff).

- Post-processing: Adjust a trained model’s outputs, for example by setting group-specific decision thresholds.
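Alongside data augmentation, a common pre-processing technique is reweighing (Kamiran and Calders), also available in AIF360 as `Reweighing`. Each training example receives the weight P(group) * P(label) / P(group, label), so that group and label look statistically independent in the weighted data. The helper names and toy data below are hypothetical; in practice the weights would be passed to a learner as sample weights (many scikit-learn estimators accept `sample_weight` in `fit`).

```python
from collections import Counter
from typing import Hashable, List, Sequence


def reweighing_weights(groups: Sequence[Hashable], labels: Sequence[int]) -> List[float]:
    """Weight each (group, label) cell by P(group) * P(label) / P(group, label)."""
    n = len(labels)
    count_g = Counter(groups)
    count_y = Counter(labels)
    count_gy = Counter(zip(groups, labels))
    weights = []
    for g, y in zip(groups, labels):
        expected = (count_g[g] / n) * (count_y[y] / n)  # frequency if group and label were independent
        observed = count_gy[(g, y)] / n  # actual joint frequency
        weights.append(expected / observed)
    return weights


def weighted_positive_rate(groups, labels, weights, g) -> float:
    """Share of group g's total weight that carries a positive label."""
    total = sum(w for gg, w in zip(groups, weights) if gg == g)
    positive = sum(w for gg, y, w in zip(groups, labels, weights) if gg == g and y == 1)
    return positive / total


groups = ["A"] * 4 + ["B"] * 4
labels = [1, 0, 0, 0, 1, 1, 1, 0]  # hypothetical: A has 25% positives, B has 75%
w = reweighing_weights(groups, labels)
print(weighted_positive_rate(groups, labels, w, "A"))  # about 0.5
print(weighted_positive_rate(groups, labels, w, "B"))  # about 0.5
```

Underrepresented cells (positive examples in group A, negative examples in group B) are up-weighted, which equalizes the weighted positive rates without altering or duplicating any records.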

Case Studies: Fairness in Action

- Healthcare:
  - PathAI reduced diagnostic disparities by 40% in breast cancer detection using racially balanced datasets.
  - Stanford Medicine deployed debiased models that improved diabetes prediction accuracy for Hispanic patients by 22%.

- Finance:
  - Upstart revised its lending model to exclude ZIP codes, cutting approval gaps for Black borrowers by 35%.

- Recruitment:
  - LinkedIn integrated fairness-aware algorithms, increasing female candidate recommendations by 27% for tech roles.

- Criminal Justice:
  - Northpointe (COMPAS) reduced racial bias in recidivism predictions by 18% after recalibrating risk scores.

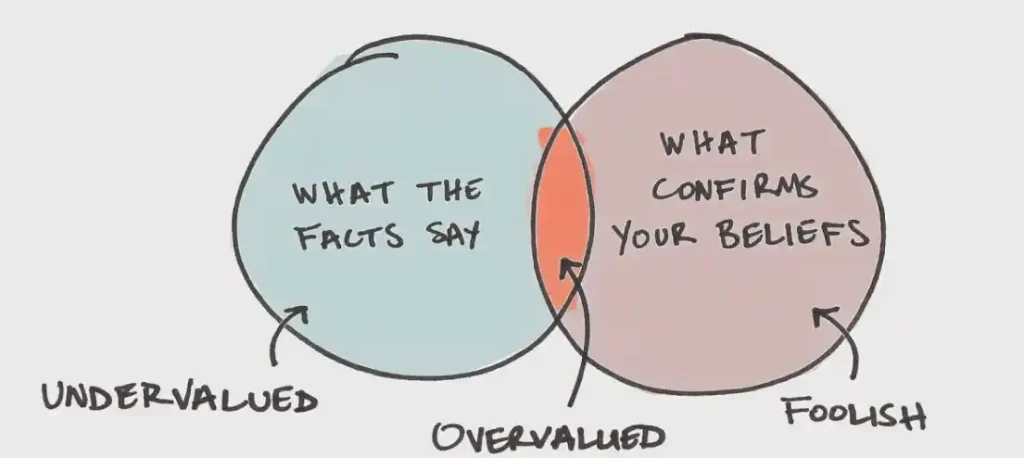

Challenges and Trade-Offs

- Fairness vs. Accuracy: Optimizing for equity may reduce overall performance.
  - Solution: Pareto (multi-objective) analysis makes the trade-off explicit so teams can choose an acceptable operating point; measurement toolkits such as Meta’s Fairness Flow help quantify performance across groups.

- Data Scarcity: Marginalized groups are often underrepresented.
  - Solution: Generative models (e.g., GANs) can synthesize additional examples for underrepresented groups.

- Explainability: Complex models such as deep neural networks obscure bias sources.
  - Solution: SHAP values and LIME enhance interpretability.

- Regulatory Fragmentation: Differing global standards complicate compliance.
  - Solution: International standards such as ISO/IEC 42001 (AI management systems) provide a common baseline for cross-border deployments.
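The fairness-versus-accuracy trade-off can be made concrete by evaluating candidate operating points and keeping only the Pareto-optimal ones. The sketch below assumes a hypothetical grid search over group-specific decision thresholds (itself a post-processing technique), scoring each configuration by accuracy and demographic parity gap; it illustrates the idea and is not how any named framework works. All data and names are hypothetical.

```python
from itertools import product
from typing import Dict, List, Sequence, Tuple

Point = Tuple[float, float, Dict[str, float]]  # (accuracy, parity gap, thresholds)


def evaluate(scores: Sequence[float], labels: Sequence[int], groups: Sequence[str],
             thresholds: Dict[str, float]) -> Tuple[float, float]:
    """Accuracy and demographic parity gap under group-specific thresholds."""
    preds = [int(s >= thresholds[g]) for s, g in zip(scores, groups)]
    accuracy = sum(p == y for p, y in zip(preds, labels)) / len(labels)
    rates = []
    for g in sorted(set(groups)):
        group_preds = [p for p, gg in zip(preds, groups) if gg == g]
        rates.append(sum(group_preds) / len(group_preds))
    return accuracy, max(rates) - min(rates)


def pareto_front(points: List[Point]) -> List[Point]:
    """Keep points that no other point beats on both accuracy (higher) and gap (lower)."""
    front = []
    for acc, gap, cfg in points:
        dominated = any(
            a2 >= acc and g2 <= gap and (a2 > acc or g2 < gap)
            for a2, g2, _ in points
        )
        if not dominated:
            front.append((acc, gap, cfg))
    return front


# Hypothetical model scores for two groups.
scores = [0.9, 0.6, 0.4, 0.2, 0.8, 0.65, 0.55, 0.1]
labels = [1, 1, 0, 0, 1, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
candidates = [0.3, 0.5, 0.7]

points: List[Point] = []
for t_a, t_b in product(candidates, repeat=2):
    cfg = {"A": t_a, "B": t_b}
    acc, gap = evaluate(scores, labels, groups, cfg)
    points.append((acc, gap, cfg))

for acc, gap, cfg in sorted(pareto_front(points), key=lambda p: p[1]):
    print(f"thresholds={cfg} accuracy={acc:.2f} parity_gap={gap:.2f}")
```

On this toy data, the surviving points include configurations with zero parity gap but lower accuracy and configurations with higher accuracy but a nonzero gap; choosing among them is a policy decision, not a purely technical one.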

The Future of Fairness in AI

- Generative AI Governance:
  - Tools like OpenAI’s Moderation API classify potentially harmful content, such as hate speech, so developers can filter model outputs.

- Automated Real-Time Audits:
  - Platforms like Arthur AI monitor models continuously, flagging drift and bias.

- Regulatory Evolution:
  - Brazil’s proposed AI Bill (2023) and Japan’s Social Principles of Human-Centric AI emphasize fairness and non-discrimination.

- Participatory Design:
  - Resources like Google’s PAIR (People + AI Research) Guidebook encourage involving affected communities, including marginalized groups, in AI development.
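Continuous bias auditing, as in the real-time audits trend, can be approximated by recomputing a fairness metric over a sliding window of recent predictions. The class below is a hypothetical sketch, not the API of Arthur AI or any other platform: it tracks the disparate impact ratio over the last `window` predictions and raises an alert when the ratio falls below the four-fifths threshold. A production monitor would also need to account for noise in small windows.

```python
from collections import deque
from typing import Deque, Hashable, Optional, Tuple


class RollingDisparateImpactMonitor:
    """Tracks the disparate impact ratio over the most recent `window` predictions."""

    def __init__(self, unprivileged: Hashable, privileged: Hashable,
                 window: int = 1000, threshold: float = 0.8) -> None:
        self.unprivileged = unprivileged
        self.privileged = privileged
        self.threshold = threshold
        # A bounded deque drops the oldest event once the window is full.
        self.events: Deque[Tuple[Hashable, int]] = deque(maxlen=window)

    def record(self, group: Hashable, prediction: int) -> None:
        """Log one prediction (1 = favorable outcome) for a member of `group`."""
        self.events.append((group, prediction))

    def _selection_rate(self, group: Hashable) -> Optional[float]:
        preds = [p for g, p in self.events if g == group]
        return sum(preds) / len(preds) if preds else None

    def disparate_impact(self) -> Optional[float]:
        """Unprivileged selection rate / privileged selection rate, or None if undefined."""
        rate_u = self._selection_rate(self.unprivileged)
        rate_p = self._selection_rate(self.privileged)
        if rate_u is None or rate_p is None or rate_p == 0:
            return None
        return rate_u / rate_p

    def should_alert(self) -> bool:
        """True when the windowed ratio is defined and below the threshold."""
        di = self.disparate_impact()
        return di is not None and di < self.threshold


monitor = RollingDisparateImpactMonitor("A", "B", window=4)
for group, pred in [("A", 1), ("B", 1), ("A", 0), ("B", 1)]:
    monitor.record(group, pred)
print(monitor.disparate_impact(), monitor.should_alert())  # 0.5 True
```

Because old events fall out of the window, the alert clears on its own if the disparity disappears in recent traffic, which is the behavior a drift-style monitor generally wants.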

FAQs

Why are fairness measures important in AI development?

They help prevent discrimination, support legal compliance, build user trust, and improve model performance across diverse populations.

What are common fairness metrics?

Demographic parity, equal opportunity, and counterfactual fairness are widely used. New metrics like causal fairness are gaining traction.

How do fairness measures impact AI decisions?

They reduce bias in outcomes (e.g., fairer loan approvals), enhance transparency, and align decisions with ethical standards.

What tools help implement fairness?

IBM AI Fairness 360, TensorFlow Fairness Indicators (part of the TensorFlow Extended ecosystem), and AWS SageMaker Clarify are widely used.

What challenges exist in ensuring fairness?

Balancing accuracy with equity, limited data diversity, and regulatory complexity are key hurdles.
